Unity’s New XR Hands Package Adds Hand Tracking Via OpenXR

Few would deny that the goal of VR is to emulate the real world so convincingly that users no longer realise they are in a virtual environment. We are still some way from that ideal, but every new feature and update is a step forward, and Unity's latest release lets developers add hand tracking without going through third-party software development kits (SDKs).

Make no mistake: this is a big deal. It will significantly change how developers build games and applications, and it should make them more realistic overall. In the past, adding controller-free hand tracking support on Quest required importing the Oculus Integration, which also brings in prefabs, samples, and a wide range of other Quest-specific features. However, although the Oculus Integration is based on OpenXR, its hand tracking relies on a proprietary extension and a hand joint (bone) layout that isn't the industry standard. XR Hands instead builds on OpenXR's standard hand tracking, so developers can create systems that exploit hand tracking without relying on a separate vendor package to make it work.
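
For reference, the standard joint arrangement defined by OpenXR's `XR_EXT_hand_tracking` extension has 26 joints per hand: the palm and wrist, four joints for the thumb, and five for each of the other fingers. The Python enum below simply mirrors that ordering for illustration; it is not Unity's C# API, and the `finger_joints` helper is a hypothetical convenience function.

```python
from enum import IntEnum


class HandJoint(IntEnum):
    """Hand joints in the order used by OpenXR's XR_EXT_hand_tracking (XrHandJointEXT)."""
    PALM = 0
    WRIST = 1
    THUMB_METACARPAL = 2   # the thumb has no intermediate joint
    THUMB_PROXIMAL = 3
    THUMB_DISTAL = 4
    THUMB_TIP = 5
    INDEX_METACARPAL = 6
    INDEX_PROXIMAL = 7
    INDEX_INTERMEDIATE = 8
    INDEX_DISTAL = 9
    INDEX_TIP = 10
    MIDDLE_METACARPAL = 11
    MIDDLE_PROXIMAL = 12
    MIDDLE_INTERMEDIATE = 13
    MIDDLE_DISTAL = 14
    MIDDLE_TIP = 15
    RING_METACARPAL = 16
    RING_PROXIMAL = 17
    RING_INTERMEDIATE = 18
    RING_DISTAL = 19
    RING_TIP = 20
    LITTLE_METACARPAL = 21
    LITTLE_PROXIMAL = 22
    LITTLE_INTERMEDIATE = 23
    LITTLE_DISTAL = 24
    LITTLE_TIP = 25


JOINT_COUNT = len(HandJoint)  # 26 joints per hand
FINGER_TIPS = [joint for joint in HandJoint if joint.name.endswith("_TIP")]


def finger_joints(finger: str) -> list[HandJoint]:
    """Hypothetical helper: joints of one finger, ordered from the knuckle base to the tip."""
    return [joint for joint in HandJoint if joint.name.startswith(finger.upper() + "_")]
```

Because every runtime that follows the standard reports the same 26 joints in the same order, code written against this layout does not need per-vendor remapping, which is the practical benefit of moving away from a proprietary bone arrangement.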

The term “hand tracking” refers to the process in which cameras or LiDAR sensors on the headset, or external sensor stations, track the position, depth, speed, and orientation of your hands. This tracking data is analysed and translated into a real-time virtual representation of your hands and their movements, which is then passed to the application or game you are using so that you can interact with its world using your hands in a natural, intuitive way. Hand tracking typically works in one of two ways:
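
One step in turning raw tracking data into a usable representation is expressing joint positions in world space. The minimal sketch below assumes joints are measured relative to the headset and that the headset pose is known as a rotation matrix plus a position; the function names and the yaw-only rotation are illustrative simplifications. In practice, OpenXR runtimes usually report joint poses in an application-chosen reference space, so this conversion is typically done for you.

```python
import math

Vec3 = tuple[float, float, float]
Matrix3 = list[list[float]]


def yaw_rotation(angle_rad: float) -> Matrix3:
    """Rotation matrix for turning the head by angle_rad about the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def to_world(local: Vec3, head_rotation: Matrix3, head_position: Vec3) -> Vec3:
    """Map a headset-relative point into world space: world = R * local + t."""
    return tuple(
        sum(head_rotation[row][col] * local[col] for col in range(3)) + head_position[row]
        for row in range(3)
    )


def hand_to_world(joints: dict[str, Vec3], head_rotation: Matrix3,
                  head_position: Vec3) -> dict[str, Vec3]:
    """Convert every tracked joint of one hand, keeping the joint names."""
    return {name: to_world(p, head_rotation, head_position) for name, p in joints.items()}


if __name__ == "__main__":
    head_position = (0.0, 1.6, 0.0)  # headset 1.6 m above the floor origin
    hand = {"wrist": (0.0, -0.3, -0.4), "index_tip": (0.05, -0.2, -0.55)}
    print(hand_to_world(hand, yaw_rotation(math.radians(30)), head_position))
```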

- Inside-out tracking. Here the tracking originates from the headset itself, which makes it the approach standalone virtual reality headsets rely on. The headset must be equipped with cameras or LiDAR sensors that keep track of the position of your hands relative to their surroundings, and this information is then processed into raw positional data. Because the sensors view the scene from a single vantage point on the headset, a three-dimensional representation of the world has to be built from only one direction. This reduces the quality and precision of tracking compared with outside-in tracking, but it frees the wearer from having to stay within a particular ‘tracking zone.’

- Outside-in tracking. In outside-in systems, tracking is carried out by multiple external sensors, typically using LiDAR or IR technology, that together form a “tracking zone.” Combining data from the headset and these external sensors produces a much more accurate representation of a person’s position and movements, including their hands. Because your hands are observed from several angles at once, it is much simpler to compute a 3D map of them within the tracking zone. The result is a more realistic interpretation, but the need for external equipment makes the system much less convenient.
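
Why do multiple viewpoints help? A single sensor only tells you the direction to the hand (a ray), leaving its depth ambiguous, whereas two sensors give two rays that pin down a 3D point. The sketch below uses the textbook midpoint triangulation method, which returns the point halfway between the closest points of two rays; it illustrates the geometry and is not a description of how any particular tracking system works. The sensor positions and directions are assumed inputs.

```python
Vec3 = tuple[float, float, float]


def dot(u: Vec3, v: Vec3) -> float:
    return sum(a * b for a, b in zip(u, v))


def add(u: Vec3, v: Vec3) -> Vec3:
    return tuple(a + b for a, b in zip(u, v))


def sub(u: Vec3, v: Vec3) -> Vec3:
    return tuple(a - b for a, b in zip(u, v))


def scale(u: Vec3, k: float) -> Vec3:
    return tuple(a * k for a in u)


def triangulate_midpoint(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point seen by two sensors.

    p1, p2: sensor positions; d1, d2: direction from each sensor towards the hand.
    Finds the closest points p1 + s*d1 and p2 + t*d2 and returns their midpoint,
    which tolerates noisy rays that do not intersect exactly.
    """
    w0 = sub(p1, p2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    closest_on_1 = add(p1, scale(d1, s))
    closest_on_2 = add(p2, scale(d2, t))
    return scale(add(closest_on_1, closest_on_2), 0.5)
```

For example, sensors at `(0, 0, 0)` and `(2, 0, 0)` looking along `(1, 1, 0)` and `(-1, 1, 0)` place the hand at `(1.0, 1.0, 0.0)`. With only the first sensor, any point along its ray would be equally plausible.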

When hand tracking is implemented in a virtual reality system, users can interact with virtual objects and environments using their hands. Using your hands comes naturally in the real world, but reproducing that interaction convincingly in a virtual environment is extremely complex. When it works, the experience becomes more immersive and virtual reality becomes more compelling.
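
A common building block for hand interaction is pinch detection: treating the thumb tip and index fingertip coming together as a "select" gesture. The sketch below assumes joint positions in metres and uses two illustrative thresholds (2 cm to start a pinch, 4 cm to release it); these numbers and the `PinchDetector` class are hypothetical, not values or APIs taken from Unity.

```python
import math

Vec3 = tuple[float, float, float]


class PinchDetector:
    """Detects a thumb-index pinch, with hysteresis so the state doesn't flicker."""

    def __init__(self, press_distance: float = 0.02, release_distance: float = 0.04):
        if release_distance <= press_distance:
            raise ValueError("release_distance must be larger than press_distance")
        self.press_distance = press_distance      # metres; gap needed to start a pinch
        self.release_distance = release_distance  # metres; gap needed to end it
        self.pinching = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> bool:
        """Feed one frame of fingertip positions; returns whether the hand is pinching."""
        gap = math.dist(thumb_tip, index_tip)
        if self.pinching:
            if gap > self.release_distance:
                self.pinching = False
        elif gap < self.press_distance:
            self.pinching = True
        return self.pinching
```

Using separate press and release distances is a deliberate design choice: tracking noise near a single threshold would otherwise make a held pinch rapidly toggle on and off.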

The XR Hands package, which is currently in preview, provides hand tracking through Unity’s standard XR subsystem architecture together with OpenXR. This means it can be used alongside other standard systems such as the XR Interaction Toolkit. XR Hands currently supports Quest and HoloLens, and Unity plans to add support for other OpenXR headsets that offer hand tracking.

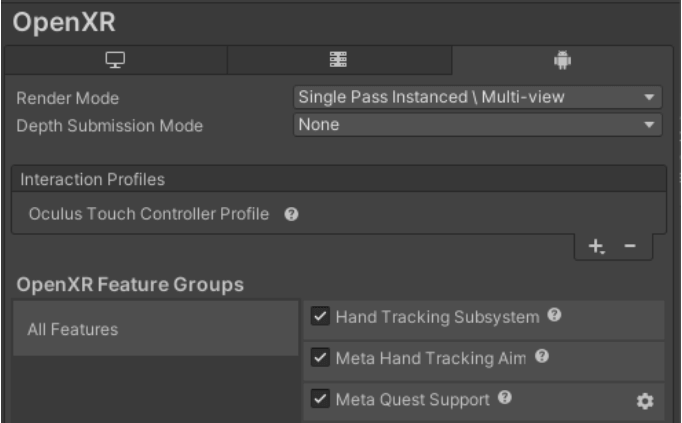

Because the package is still in pre-release, you need to add it to your project manually. Unity also recommends manually updating the OpenXR package to version 1.6.0 in your project’s package manifest, and turning on the hand tracking extensions in the OpenXR settings. The screenshot above shows the relevant OpenXR options; tick them as required to get started.
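
If you prefer to script the manifest change, the sketch below edits a Unity project's `Packages/manifest.json`, pinning `com.unity.xr.openxr` to 1.6.0 and adding `com.unity.xr.hands`. It is a hypothetical helper, not a Unity tool, and it deliberately does not guess the XR Hands preview version: pass in whichever version Unity's documentation lists. Editing the file by hand achieves the same result.

```python
import json
from pathlib import Path

# Package names as they appear in a Unity project's Packages/manifest.json.
OPENXR_PACKAGE = "com.unity.xr.openxr"
HANDS_PACKAGE = "com.unity.xr.hands"


def update_dependencies(manifest: dict, hands_version: str,
                        openxr_version: str = "1.6.0") -> dict:
    """Pin OpenXR and add XR Hands in an already-parsed manifest.

    Other dependencies and top-level keys (e.g. scoped registries) are left untouched.
    """
    deps = manifest.setdefault("dependencies", {})
    deps[OPENXR_PACKAGE] = openxr_version
    deps[HANDS_PACKAGE] = hands_version
    return manifest


def add_xr_hands(manifest_path: str, hands_version: str,
                 openxr_version: str = "1.6.0") -> None:
    """Read, update and rewrite the manifest file in place."""
    path = Path(manifest_path)
    manifest = json.loads(path.read_text(encoding="utf-8"))
    update_dependencies(manifest, hands_version, openxr_version)
    path.write_text(json.dumps(manifest, indent=2) + "\n", encoding="utf-8")
```

Usage, run from the project root with the placeholder replaced by the real preview version: `add_xr_hands("Packages/manifest.json", hands_version="<preview version>")`.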

Being able to add hand tracking this easily is a major productivity boost that will make your projects both easier to develop and more realistic, so dive in and start experimenting.